Could AI “world models” be the most viable path to truly photorealistic, interactive VR?

Most depictions of “virtual reality” in science fiction involve either wearing a headset or connecting a neural interface to enter an interactive virtual world that looks completely real. By contrast, high-end VR today can have relatively realistic graphics, but it still falls clearly short of photorealism, and these virtual worlds take hundreds of thousands or millions of dollars and years of development to build. Worse, mainstream standalone VR has graphics that, in the absolute best cases, look like an early PS4 game, and on average are closer to a late PS2 game.

With each new Quest headset generation, Meta and Qualcomm have doubled GPU performance. Impressive as that is, at this pace it would take decades to reach the performance of today's PC graphics cards, let alone anything approaching photorealism. And much of the future gains will be spent on increasing resolution. Techniques like eye-tracked foveated rendering and neural upscaling help, but they can only go so far.

Gaussian splatting enables photorealistic graphics in standalone VR, but splats only represent a static moment in time, so they must be captured from the real world or pre-rendered from a 3D environment in the first place. Adding real-time interactivity requires a hybrid approach that incorporates traditional rendering.

But there may be a completely different path to photorealistic interactive virtual worlds. It's a stranger one with problems of its own, but it's potentially far more promising.

Yesterday, Google DeepMind revealed Genie 3, an AI model that generates real-time interactive video streams from text prompts. It's essentially a near-photorealistic video game, but every frame is entirely AI-generated, with no traditional rendering or image input involved.

Google calls Genie 3 a “world model,” but it could also be described as an interactive video model. The initial input is a text prompt, the real-time input is mouse and keyboard, and the output is a video stream.

As with many other generative AI systems, what's striking about the Genie series is the incredible pace of progress.

The original Genie, revealed in early 2024, focused primarily on generating 2D side-scrollers at a resolution of 256×256, and only clips a second or two long were shown, since the world could only run for a few dozen frames before glitching and dissolving into an inconsistent mess.

Genie 1 demo from February 2024.

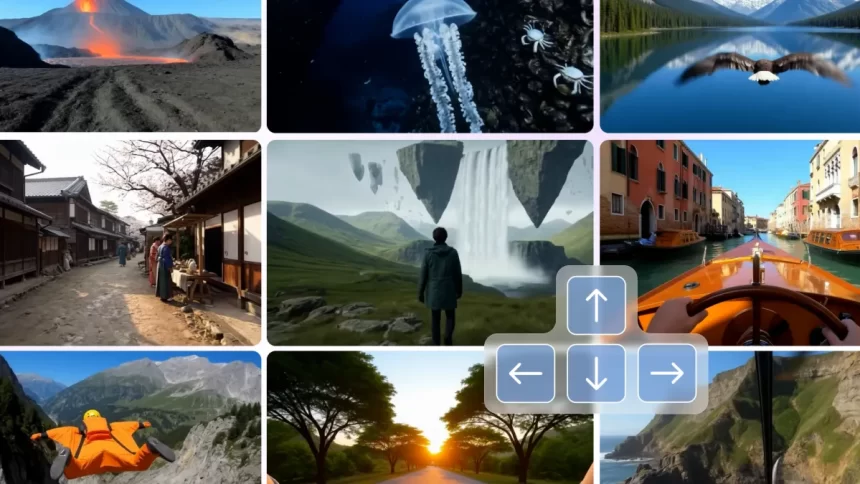

Then in December, Genie 2 surprised the AI industry by achieving a world model with 3D graphics, controllable in first or third person via standard mouselook and WASD or arrow keys. It output at 360p 15fps and could run for around 10 to 20 seconds before the world began to lose consistency.

Genie 2's output was also blurry and low-fidelity, with a distinctly AI-generated look reminiscent of video generation models from a few years earlier.

Genie 2 from December (left) versus Genie 3.

Genie 3 is a big step forward. It outputs very realistic graphics at 720p 24fps, and environments remain fully consistent for a minute and “mostly” consistent for a few minutes.

If you're still not sure what Genie 3 actually does, let's spell it out: you type a description of the virtual world you want, and within seconds it appears on screen, ready for you to move through with standard keyboard and mouse controls.

And these virtual worlds aren't static. Doors open as you approach them, moving objects cast dynamic shadows, and you can even see physical interactions such as splashes and ripples in water as objects move through it.

In this demo, the character's boots appear to interact with the ground.

Without a doubt, the most fascinating aspect of Genie 3 is that these behaviors emerge from the underlying AI model during training rather than being pre-programmed. Human developers often spend months simulating just one aspect of physics, whereas Genie 3 simply has this knowledge baked in. That's why Google calls it a “world model.”

Even more complex interactions can be achieved by describing them in the prompt.

In one example clip, the prompt “POV action camera of a tan house being painted by a first-person agent with a paint roller” produced what is essentially a photorealistic wall-painting minigame.

Prompt: “POV action camera of a tan house being painted by a first-person agent with a paint roller”

Genie 3 also adds support for “promptable world events,” ranging from changing the weather to introducing new objects and characters.

These event prompts could come from the player via voice input, or be pre-scheduled by the world's creator.

This could one day enable a nearly endless variety of new content and events in a virtual world.

Genie 3's “promptable world events” in action.

Of course, 720p at 24fps is far below what modern gamers expect, and gameplay sessions last much longer than a minute or two. But given the pace of progress, these basic technical limitations could disappear in the coming years.
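To put that gap in rough numbers, the sketch below compares the raw pixel throughput of Genie 3's stated 720p 24fps output with a stereo VR target. The VR figures are an assumption for illustration only: 2064×2208 pixels per eye at 90Hz, roughly the panel of a current standalone headset. It ignores foveation and upscaling, so treat the result as a ballpark, not a requirement.

```python
def pixels_per_second(width: int, height: int, fps: int, views: int = 1) -> int:
    """Raw pixel throughput: pixels per frame, times views (eyes), times frames per second."""
    return width * height * views * fps

# Genie 3 output as described in the article: 720p (1280x720) at 24fps, one view.
genie3 = pixels_per_second(1280, 720, 24)

# Assumed VR target, for illustration only: 2064x2208 per eye, two eyes, 90Hz.
vr_target = pixels_per_second(2064, 2208, 90, views=2)

ratio = vr_target / genie3
print(f"Genie 3:   {genie3 / 1e6:.1f} Mpx/s")
print(f"VR target: {vr_target / 1e6:.1f} Mpx/s")
print(f"Gap: about {ratio:.0f}x")
```

Under these assumptions the model would need to generate on the order of 37 times more pixels per second than it does today.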

When it comes to adapting models like Genie 3 for VR, additional problems arise.

The model would need to take at least a 6DOF head pose as input alongside directional movement, and ideally hand and body poses too, unless you're content to roam the world without directly interacting with objects.

While not impossible in theory, this may require much broader training datasets and significant architectural changes.
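To make the difference from mouse-and-keyboard input concrete, here is a purely hypothetical shape for the per-frame input a VR-capable world model might consume. Nothing here reflects Genie 3's actual interface; the class names, fields, and the flattening order are all assumptions for illustration. A 6DOF pose is three positional degrees of freedom plus three rotational ones, the latter stored here as a four-number unit quaternion.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), assumed unit length


@dataclass
class Pose6DoF:
    """Hypothetical pose: 3 DoF of position plus 3 DoF of orientation."""
    position_m: Vec3
    orientation: Quat  # 4 numbers encoding the 3 rotational DoF


@dataclass
class VRFrameInput:
    """Hypothetical per-frame conditioning input for a VR-capable world model."""
    head: Pose6DoF
    move_dir: Tuple[float, float]  # thumbstick / WASD-style locomotion, each in [-1, 1]
    hands: List[Pose6DoF] = field(default_factory=list)  # 0 to 2 tracked hands

    def to_vector(self) -> List[float]:
        """Flatten head, movement, then each hand, in that order, into a list of floats."""
        vec: List[float] = [*self.head.position_m, *self.head.orientation, *self.move_dir]
        for hand in self.hands:
            vec += [*hand.position_m, *hand.orientation]
        return vec


identity = (1.0, 0.0, 0.0, 0.0)
frame = VRFrameInput(head=Pose6DoF((0.0, 1.6, 0.0), identity), move_dir=(0.0, 1.0))
print(len(frame.to_vector()))  # head pose (7) + movement (2)
```

The output length varies with how many hands are tracked; a real model would more likely use a fixed-size input, for example by padding or masking untracked hands.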

It would also, of course, need to output stereoscopic images. However, the second eye's view could be synthesized, either with AI view synthesis or with conventional reprojection techniques.
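One conventional way to synthesize a second eye from one generated frame is depth-image-based rendering: each pixel is shifted horizontally by its disparity d = f·B / Z, where f is the focal length in pixels, B the interpupillary baseline, and Z the pixel's depth. The sketch below is a naive forward warp under stated assumptions (a per-pixel depth map is available, and images are plain nested lists); it is not how Genie 3 or any particular headset does it, and the function names are hypothetical.

```python
from typing import List, Optional


def disparity_px(depth_m: float, focal_px: float, baseline_m: float) -> float:
    """Horizontal pixel offset between the two eyes for a point at depth Z: d = f * B / Z."""
    return focal_px * baseline_m / depth_m


def synthesize_right_eye(
    left: List[List[int]],
    depth: List[List[float]],
    focal_px: float,
    baseline_m: float,
) -> List[List[Optional[int]]]:
    """Forward-warp a left-eye image to the right eye using per-pixel depth.

    When two source pixels land on the same target, the nearer one wins (a z-buffer).
    Targets that nothing maps to stay None: disocclusion holes that a real system
    would have to fill in, for example by inpainting.
    """
    height, width = len(left), len(left[0])
    right: List[List[Optional[int]]] = [[None] * width for _ in range(height)]
    zbuf = [[float("inf")] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            z = depth[y][x]
            # The right eye sits to the right, so points shift left in its view.
            tx = round(x - disparity_px(z, focal_px, baseline_m))
            if 0 <= tx < width and z < zbuf[y][tx]:
                zbuf[y][tx] = z
                right[y][tx] = left[y][x]
    return right
```

Nearby objects shift further than the background, which is what produces the stereo depth cue; the holes left behind are the main weakness of this approach and one reason AI view synthesis is attractive.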

Latency is also a concern, but Google claims Genie 3 has 50ms end-to-end control latency. That shouldn't be a problem if a future model runs at 90fps and is combined with VR reprojection.
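A back-of-the-envelope calculation shows why reprojection matters. The sketch assumes a constant 50ms end-to-end latency (Google's stated figure) and compares today's 24fps with a hypothetical future 90fps model, counting how many display frames pass before an input is reflected in newly generated imagery. It does not model reprojection itself, which would warp the latest frame to the current head pose at every refresh to hide that lag.

```python
def frame_time_ms(fps: float) -> float:
    """Time between displayed frames, in milliseconds."""
    return 1000.0 / fps


def frames_elapsed(latency_ms: float, fps: float) -> float:
    """How many display refreshes pass while one input travels through the model."""
    return latency_ms / frame_time_ms(fps)


LATENCY_MS = 50.0  # Google's stated end-to-end control latency for Genie 3

for fps in (24, 90):
    print(f"{fps} fps: {frame_time_ms(fps):.1f} ms per frame, "
          f"{frames_elapsed(LATENCY_MS, fps):.1f} frames behind input")
```

At 90fps the generated imagery would trail head motion by roughly four and a half frames, which reprojection could mask for head rotation, much as it already does for conventionally rendered VR.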

Google also notes that Genie 3's action space is limited, that it can't model complex interactions between multiple independent agents, and that it can't simulate real-world locations with full geographic accuracy. It describes these as “ongoing research challenges.”

However, there is another, much more fundamental problem with AI “world models” like Genie 3 that limits their scope, and it's why traditional rendering won't disappear anytime soon.

The problem is steerability: how closely the output matches the details of the text prompt.

In recent years you've probably seen impressive examples of highly realistic AI image generation, and in recent months AI video generation too (such as Google DeepMind's Veo 3). But unless you've used these models yourself, you may not realize that while they follow your instructions in a general sense, they often fail to match the details you specified.

Furthermore, when the output contains something unwanted, even tweaking the prompt to remove it often fails. For example, I recently asked Veo 3 to generate a video of someone eating a hot dog with only ketchup and no mustard. But no matter how strongly I emphasized that detail, the model wouldn't produce a hot dog without mustard.

With traditionally rendered video games, developers get exactly what they intend players to see. Precise art direction and stylistic details give a virtual world its unique atmosphere, often the result of years of painstaking refinement.

By contrast, an AI model's output comes from a latent space shaped by patterns in its training data. Text prompts act more like coordinates in a high-dimensional space than commands that are truly understood, so the output won't exactly match what the artist had in mind. This becomes even harder when promptable world events are involved.

Of course, the steerability of AI world models will also improve over time. But it's a much harder problem than improving resolution and memory horizon, and these models may never offer the precise control of a traditional game engine.

Prompt: “On the blackboard at the front of the classroom is written ‘Genie 3 Memory Test’, and below it are beautiful chalk drawings of an apple, a coffee mug and a tree. The classroom is empty except for this.”

Still, even with steerability issues, it would be foolish not to see the appeal of ultimately photorealistic interactive VR worlds that can be brought into existence simply by speaking or typing a description. AI world models appear uniquely positioned to deliver on the promise of Star Trek's holodeck, even more so than using AI to generate assets for traditional rendering.

To be clear, we're still in the early days of AI “world models.” There are big challenges to solve, and it will probably be years before VR-capable models that can run for hours arrive on your headset. But the pace of progress here is astonishing, and the possibilities are tantalizing. This is a field of research we'll be following extremely closely.