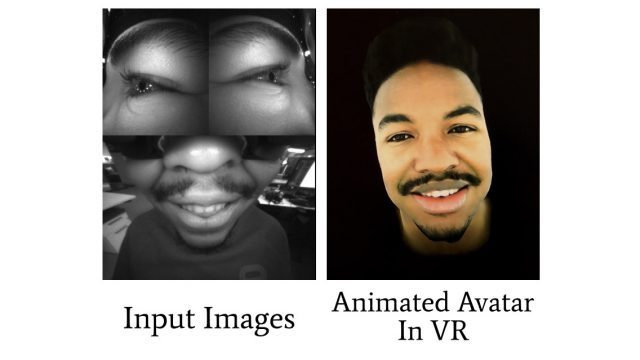

Apple is bringing a significant visual upgrade to Vision Pro's Persona avatars in visionOS 26. After seeing the new system in person, it's hard not to be impressed. But a major question remains: how will Apple bring this level of fidelity to smaller headsets, which have even less room for the cameras that are essential to this kind of experience?

visionOS 26 Persona still raises the bar

The current Persona system in visionOS 2 was already probably the most realistic real-time digital avatar system on the market. Still, Apple has raised its own bar with the Persona update in visionOS 26. In fact, the company is happy enough with the result that it is removing the "beta" tag from the Persona feature.

Last week at WWDC, I was able to try out the new Persona technology myself. I have to say it looks just like what Apple showed off in its first public footage.

https://www.youtube.com/watch?v=irgpj4ojphm

Note: Where my mouth looks blurry, that's because I put my hands in front of it, blocking the view of the headset's downward-facing cameras. And if the movements you're seeing look "unnatural", that's because they are! I was intentionally making strange movements and poses to see how well the system interpreted them.

Even with the same capture process, the same cameras on the headset, and everything still processed on-device, the results are clearly improved. Skin looks much more detailed, and I was particularly impressed by how well it captured my stubble. The hair on the head is more detailed too.

But more than that, Apple's Persona system captures facial movements in impressive detail. You can see me moving my face in unusual, asymmetrical ways, yet the results still look subtle and realistic. It's not entirely clear whether the motion mapping has been updated in this new version of Persona, or whether it simply looks more realistic because the underlying scans are more detailed.

Apple also confirmed to Road to VR that these improvements carry over to the version of the Persona shown on the external "EyeSight" display. While the brightness and resolution of the external display are now largely the limiting factors, the Persona displayed on the outside of the headset should look a little more detailed and realistic.

Overall, the "ghostly" feel of Personas seems to be significantly reduced. The hands, however, still look ghostly (perhaps even more so than before, because there is now a greater contrast between the blurry hands and the solidity of the face).

How does this scale to smaller headsets?

This is an obvious leap in the visual quality of Personas, but the big question in my mind is how Apple can maintain this quality bar in the future.

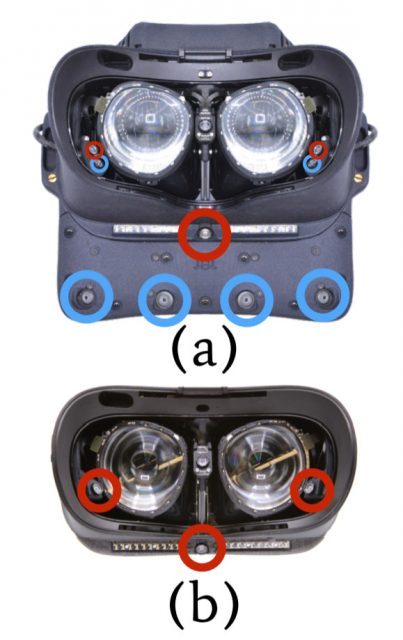

It isn't just that more compact headsets need to be more power efficient in order to do the same amount of computing in a smaller package. The smaller the headset, the less space there is to fit cameras.

What makes a Persona possible is that the headset's cameras have a line of sight to the user's mouth, cheeks, and eyes. This is the raw "ground truth" view that must be interpreted to work out how facial movements should be mapped onto the digital avatar.

If you have a perfect frontal image of someone's face, this isn't too difficult. But as the viewing angle becomes more extreme, it becomes more and more challenging. That's why early face-tracking systems often had a camera hanging way out in front of the user (and therefore a clear, undistorted view).

Even some modern face-tracking add-ons for headsets hang the camera quite far from your face to get a clearer view.

If you want to make a headset smaller, the cameras move closer to your face. That means the "ground truth" data from the cameras is captured from a very sharp angle. The sharper the angle, the harder it is to map the observed movements onto the user's face.

But companies are getting smarter. For headsets like Quest Pro and Vision Pro, one way to deal with this "sharp angle" ground truth issue is to train the algorithm by simultaneously looking at both a clear view of the user's face and a sharp-angle view of the same face. This allows the algorithm to better predict how the clear view maps to the sharp-angle view.

This kind of approach works on headsets like Quest Pro and Vision Pro, which are still large enough that the downward-facing cameras can see enough of the face to work with, given the extra training.

However, the future direction of headsets points toward goggle-sized and then glasses-sized devices. This can already be seen in PC VR headsets like the Bigscreen Beyond. It's clear that mounting a camera on the farthest edge of such a headset wouldn't give a very clear view of the mouth. And as devices get smaller still, the view becomes completely blocked.

The only thing that looks safe here for the long term is eye-tracking. XR is primarily mediated through the eyes, so eye-tracking cameras will almost always be well positioned to see the user's eye movements.

But realistic avatars are clearly what people want for communicating remotely in XR. To achieve that, you need complete face tracking, not just eye-tracking.