Snap OS 2.0 is out now and brings the AR platform closer to being ready for consumers by adding and improving first-party apps such as Browser, Gallery, and Spotlight.

If you're not familiar with them, the current Snap Spectacles are $99/month AR glasses for developers ($50/month for students), intended to let them build apps for the consumer Specs product that the company behind Snapchat expects to ship in 2026.

The glasses have a 46° diagonal field of view, angular resolution comparable to the Apple Vision Pro, relatively limited computing power, and a battery life of just 45 minutes. They are also the bulkiest AR device yet with a "glasses" form factor, weighing 226 grams. That is almost five times as heavy as the Ray-Ban Meta glasses, though this is clearly a completely unfair comparison.

However, Snap CEO Evan Spiegel claims that the consumer Specs will offer more capability at a fraction of the weight, while running all the same apps developed so far. So arguably the more important thing to track here is Snap OS, not the developer kit hardware.

Snap OS

Snap OS is relatively unique. Although it is Android-based at the underlying level, you can't install APKs on it, so developers can't run native code or use third-party engines such as Unity. Instead, they use the Lens Studio software for Windows and macOS to build sandboxed "Lenses", the company's name for apps.

In Lens Studio, developers use JavaScript or TypeScript to interact with high-level APIs, while the operating system itself handles low-level core tech such as rendering and core interactions. This has many of the same benefits as the Shared Space in Apple's visionOS: near-instant app launches, consistent interactions, and easy implementation of frictionless shared multi-user experiences. It also lets the Spectacles mobile app serve as a spectator view for any Lens.

Snap OS does not support multitasking, but this is more likely a limitation of the current hardware than of the operating system itself.

Since releasing Snap OS alongside the latest Spectacles last year, Snap has focused on adding features for developers building Lenses.

In March, for example, the company added the ability to use GPS and compass heading to build outdoor navigation experiences, to detect when the user is holding a phone, and to bring up a system-level floating keyboard for text input.

Video recorded by UploadVR at Snap London, showing Gemini multimodal AI integration with depth caching. Note: Spectacles record a much wider field of view than you see through them. In reality, you can only see virtual content that is more or less directly in front of you.

In June, Snap added a suite of AI capabilities, including AI speech-to-text in over 40 languages, the ability to generate 3D models on the fly, and advanced integration with the visual multimodal capabilities of Google's Gemini and OpenAI's ChatGPT.

This includes depth caching for image requests, which lets developers anchor information from these AI models' responses in real-world space.
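To make the idea of anchoring an AI response in space more concrete, here is a minimal sketch of the geometry such a feature generally relies on. It assumes a simple pinhole camera model and a depth value stored alongside the frame that was sent to the AI model. The names `Intrinsics`, `unprojectPixel`, and the example numbers are hypothetical and are not Lens Studio or Snap OS APIs; Snap's actual implementation may differ.

```typescript
// Hypothetical types for illustration only; these are not Lens Studio APIs.
interface Intrinsics {
  fx: number; // focal length in pixels along x
  fy: number; // focal length in pixels along y
  cx: number; // principal point x, in pixels
  cy: number; // principal point y, in pixels
}

interface Pixel { u: number; v: number; }
interface Point3 { x: number; y: number; z: number; }

// Back-project a pixel with a known depth (metres along the optical axis)
// into a 3D point in the camera's coordinate frame, using the pinhole model.
function unprojectPixel(p: Pixel, depth: number, k: Intrinsics): Point3 {
  return {
    x: ((p.u - k.cx) * depth) / k.fx,
    y: ((p.v - k.cy) * depth) / k.fy,
    z: depth,
  };
}

// Example: an AI model labels something at pixel (820, 240), and the depth
// cached with that frame says the surface there is 2 m away.
const k: Intrinsics = { fx: 500, fy: 500, cx: 320, cy: 240 };
const anchor = unprojectPixel({ u: 820, v: 240 }, 2, k);
console.log(anchor); // { x: 2, y: 0, z: 2 }
```

The reason to cache depth at request time, under this reading, is that the AI response arrives after the wearer's head has moved, so the pixel coordinates in the response only make sense against the frame that was originally sent.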

Snap OS 2.0

With Snap OS 2.0, rather than focusing on the developer experience, Snap is now filling out its first-party software offering as a step towards the launch of the consumer Specs next year.

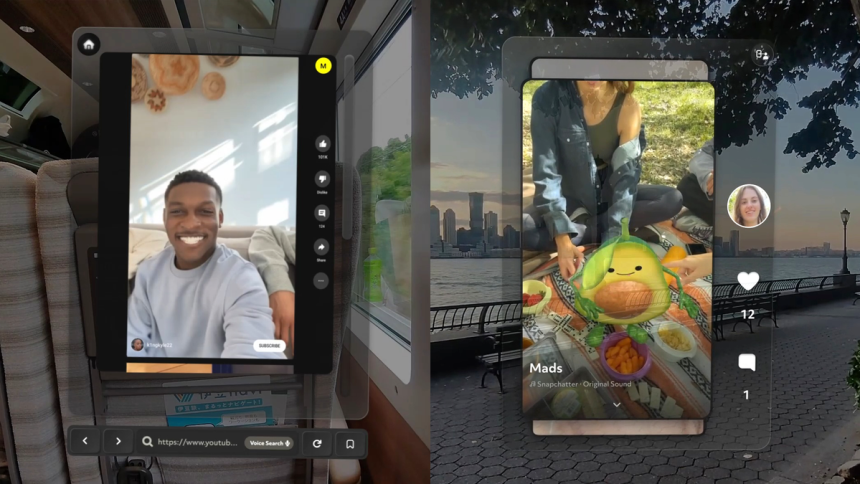

Snap OS 2.0's upgraded Browser, with Travel Mode enabled.

Snap OS now has a Travel Mode which, when enabled, lets positional tracking work properly in moving vehicles. Apple first launched this feature on Vision Pro, and Meta and Pico followed. Snap says its version should work on planes, trains, and even cars.

The Snap OS web browser has been overhauled to be "faster, more powerful, and easier to use". It now has widgets and bookmarks, as well as the ability to resize the window to your preferred aspect ratio.

Snap's browser now also supports WebXR, bringing cross-platform, web-based experiences to the glasses. Given the limited onboard compute, keep in mind that this WebXR support is intended for relatively simple AR experiences rather than vast, immersive games.
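For web developers wondering what targeting a WebXR-capable browser looks like, here is a minimal feature-detection sketch using the standard WebXR Device API call `isSessionSupported` with the `immersive-ar` session mode. Nothing in it is specific to Snap: `XRSystemLike` and `supportsImmersiveAR` are hypothetical helper names, and whether the Snap OS browser reports `immersive-ar` support in exactly this way is an assumption rather than something Snap has confirmed here.

```typescript
// Minimal structural type covering the one WebXR method used below.
// (Hypothetical helper type; in a browser, navigator.xr satisfies it.)
interface XRSystemLike {
  isSessionSupported(mode: string): Promise<boolean>;
}

// Resolves to true only if the XR system reports immersive AR support.
async function supportsImmersiveAR(xr: XRSystemLike | undefined): Promise<boolean> {
  if (!xr) {
    return false; // the browser does not expose WebXR at all
  }
  try {
    return await xr.isSessionSupported("immersive-ar");
  } catch {
    return false; // for example, blocked by the page's permissions policy
  }
}

// Usage: pass navigator.xr where it exists, then pick a code path.
const xr: XRSystemLike | undefined = (globalThis as any).navigator?.xr;
supportsImmersiveAR(xr).then((ok) => {
  console.log(ok ? "immersive-ar available" : "falling back to a flat page");
});
```

Checking support up front and falling back to an ordinary page keeps the same site usable on phones and desktops, which is the cross-platform appeal of WebXR in the first place.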

Snap OS Gallery app.

The new Gallery Lens lets you view the captures you've taken with the glasses and share them with others on Snapchat. However, the glasses can still only record 30 seconds of footage from inside a Lens and do not support general photo and video capture.

The Gallery Lens does not show the Snapchat photos and videos captured on your phone, as you might have expected. I asked Snap about this, and the company said it was an interesting idea.

Snap OS Spotlight app.

Another new first-party app in Snap OS 2.0 is Spotlight, the Snapchat phone app's equivalent of TikTok and Instagram Reels. The current Spectacles hardware's field of view is taller than it is wide, making it ideal for watching vertical videos like these.

Synth Riders

Alongside the new operating system, Snap also revealed that the XR rhythm game Synth Riders is coming to the platform.

I was able to try Synth Riders on Spectacles at Snap London. It is not the full Synth Riders experience you would get on Quest, PC VR, or Apple Vision Pro, but it is a fun adaptation that shows developers are starting to get to grips with Snap's tools.

Video recorded by UploadVR at Snap London. Unfortunately, the glasses can only record for 30 seconds.

As an aside, notice how the virtual objects cast light onto the furniture beneath them. This is possible because Snap OS provides Lenses with a continuous scene mesh, letting virtual content fit naturally into the geometry of the real world.